Is AI Making Coders Slower Than Before? Short Answer: Yes!

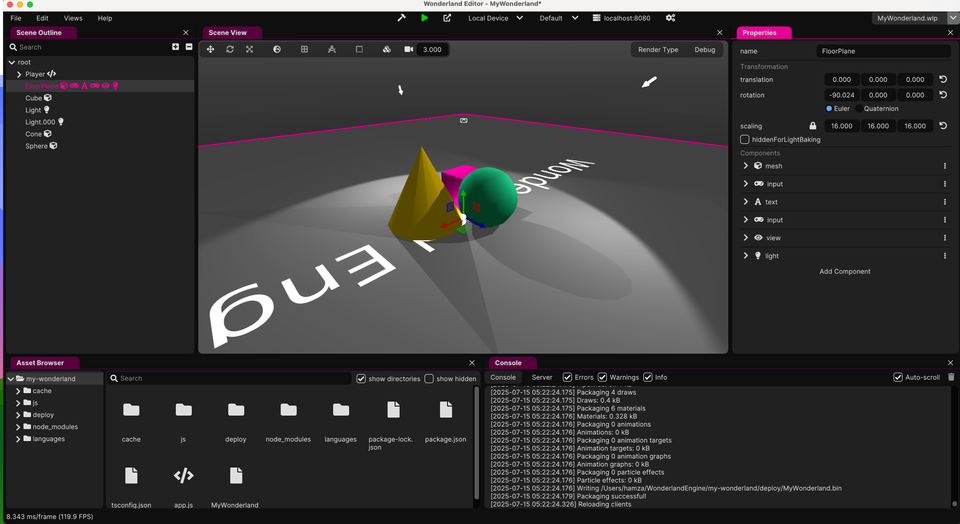

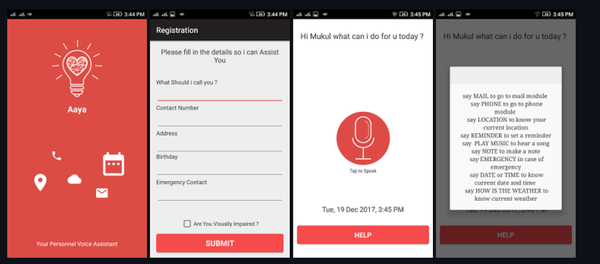

As both a doctor and a developer, I’ve spent years writing code under pressure, whether it’s building VR apps for ADHD treatment or scripting backend logic for immersive web experiences. So when AI coding assistants started popping up everywhere, I was all in.

I tried Cursor, GitHub Copilot, Tabnine, and even some niche tools like Amazon CodeWhisperer. My goal? To speed up my workflow, reduce repetitive tasks, and focus more on the architecture and logic behind my projects.

But here's the thing: it didn’t always work out that way.

In fact, after weeks of using these tools daily, I found myself spending more time reviewing, prompting, and tweaking AI-generated code than I would have spent writing it from scratch. And guess what? I'm not alone.

A Surprising Study: AI Made Developers 19% Slower

A recent study by METR (Model Evaluation and Threat Research) tested how experienced open-source developers were affected by AI tools, primarily Cursor Pro with Claude 3.5 and 3.7 Sonnet. The results were eye-opening:

Experienced open-source developers took 19% longer to complete real-world coding tasks when using AI tools compared to when they worked without them.

Even more interesting? The developers believed AI had made them faster, by about 20%, even though the data said otherwise.

The reason?

They spent too much time prompting, reviewing outputs, and waiting for responses, which outweighed any time saved during actual coding.

This matches what I saw in my own experience.
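To make the gap between perception and measurement concrete, here is a rough back-of-the-envelope sketch. The 60-minute baseline is a made-up number for illustration, and reading "20% faster" as "20% less time" is my own assumption, not a figure taken from the study's data.

```typescript
// Hypothetical baseline: a task that takes 60 minutes without AI.
// This number is invented for illustration; it is not from the study.
const baselineMinutes = 60;

// Headline figures quoted above.
const measuredSlowdown = 0.19; // tasks took 19% longer with AI
const perceivedSpeedup = 0.2; // developers felt about 20% faster

// What the clock says: 19% longer than the baseline (about 71.4 minutes).
const actualMinutes = baselineMinutes * (1 + measuredSlowdown);

// What it felt like, assuming "20% faster" means 20% less time (about 48 minutes).
const perceivedMinutes = baselineMinutes * (1 - perceivedSpeedup);

// The gap between feeling and reality (about 23.4 minutes per task).
const gapMinutes = actualMinutes - perceivedMinutes;

console.log(
  `actual ${actualMinutes.toFixed(1)} min, ` +
    `perceived ${perceivedMinutes.toFixed(1)} min, ` +
    `gap ${gapMinutes.toFixed(1)} min`
);
```

On a one-hour task, that is more than twenty minutes of difference between how the work felt and how long it actually took.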

What I Felt First-Hand

When I used AI tools to generate functions, especially in complex projects involving WebGL rendering or physics-based interactions (like in my VR apps), the suggestions often looked good at first glance but needed heavy modifications.

Sometimes, the generated code would:

- Miss critical edge cases

- Introduce subtle bugs

- Fail to follow project-specific patterns or style guides

- Require manual linting or formatting

And each time, I found myself going back and forth between the prompt, the output, and the codebase, trying to "teach" the AI what I wanted instead of just writing the code directly.

It felt like I was stuck in a feedback loop, and honestly, it was exhausting.

So… Should We Stop Using AI for Coding?

Absolutely not.

Like many things in tech (and medicine), the key is how you use it.

Here are some strategies I’ve developed over time to use AI more wisely in my development workflow:

1. Use AI for Simple, Repetitive Tasks

AI shines when the task is clear-cut, well-defined, and doesn't require deep domain knowledge. For example:

- Writing boilerplate code (e.g., React components, Redux slices)

- Generating unit test templates

- Converting JSON to TypeScript interfaces

- Building simple CLI scripts

These are areas where AI can save time without dragging you into endless reviews.
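As a small illustration of the JSON-to-interface case, here is the kind of output I mean. The payload, the `UserProfile` name, and every field in it are hypothetical, made up for this sketch. The type guard is my own addition so the generated interface gets checked at runtime instead of just trusted.

```typescript
// Hypothetical API payload; the field names are invented for illustration.
const samplePayload = `{
  "id": 42,
  "displayName": "Ada",
  "tags": ["admin", "beta"],
  "lastLogin": null
}`;

// The kind of interface an assistant might produce from the JSON above.
interface UserProfile {
  id: number;
  displayName: string;
  tags: string[];
  // null in the sample; assuming it is a date string when present.
  lastLogin: string | null;
}

// Runtime guard: confirms that a parsed value really has the shape above.
function isUserProfile(value: unknown): value is UserProfile {
  if (typeof value !== "object" || value === null) return false;
  const { id, displayName, tags, lastLogin } = value as Record<string, unknown>;
  return (
    typeof id === "number" &&
    typeof displayName === "string" &&
    Array.isArray(tags) &&
    tags.every((t) => typeof t === "string") &&
    (lastLogin === null || typeof lastLogin === "string")
  );
}

const parsed: unknown = JSON.parse(samplePayload);

if (isUserProfile(parsed)) {
  // Inside this branch, parsed is typed as UserProfile.
  console.log(`${parsed.displayName} has ${parsed.tags.length} tags`);
}
```

The interface is quick to generate and quick to verify, which is exactly why this kind of task is a good fit.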

2. Don’t Trust the Output, Review Ruthlessly

Treat AI-generated code like a junior developer who's eager but inexperienced. Always review it line by line.

Use your IDE's diff tools, run tests, and check for security issues before merging anything. This isn't a shortcut; it's an extra layer of quality control.

3. Fine-Tune Prompts for Your Context

The better your prompts, the better the results. Include:

- File context

- Project structure

- Desired behavior

- Constraints (e.g., performance, style)

Instead of asking "Write me a function to sort this array," try:

“Given this object schema and sorting rules, write a TypeScript function that sorts users by name and date of birth, handling nulls and case-insensitive names.”

You’ll get cleaner, more usable code.
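For reference, this is roughly what a good answer to that second prompt could look like. It is a minimal sketch, not output from any particular tool. The `User` shape, the choice to sort nulls last, and the assumption that dates arrive as ISO `YYYY-MM-DD` strings (which compare correctly as plain text) are all mine.

```typescript
// Hypothetical schema; field names are assumptions for illustration.
interface User {
  name: string | null;
  dateOfBirth: string | null; // assumed ISO 8601 date, e.g. "1990-04-12"
}

// Compares two nullable values, placing nulls after all non-null values.
function compareNullable<T>(
  a: T | null,
  b: T | null,
  cmp: (x: T, y: T) => number
): number {
  if (a === null && b === null) return 0;
  if (a === null) return 1;
  if (b === null) return -1;
  return cmp(a, b);
}

// Returns a new array sorted by name (ignoring case), then by date of birth.
function sortUsers(users: readonly User[]): User[] {
  return [...users].sort((u, v) => {
    const byName = compareNullable(u.name, v.name, (x, y) =>
      // "accent" sensitivity treats "alice" and "Alice" as equal.
      x.localeCompare(y, "en", { sensitivity: "accent" })
    );
    if (byName !== 0) return byName;
    // ISO date strings sort chronologically when compared as text.
    return compareNullable(u.dateOfBirth, v.dateOfBirth, (x, y) =>
      x < y ? -1 : x > y ? 1 : 0
    );
  });
}

const sortedUsers = sortUsers([
  { name: "bob", dateOfBirth: "1990-01-01" },
  { name: null, dateOfBirth: "1980-01-01" },
  { name: "Alice", dateOfBirth: null },
  { name: "alice", dateOfBirth: "1985-05-05" },
  { name: "Bob", dateOfBirth: "1970-01-01" },
]);
console.log(sortedUsers);
```

Notice how much of this code exists only because the prompt spelled out nulls and case-insensitivity. The vague prompt gives the tool no reason to handle either.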

4. Know When to Walk Away

If you find yourself modifying the same AI suggestion three times and still not getting it right, stop. Just write the damn code yourself. Your time is valuable. If AI isn't helping, it's slowing you down, and the METR study suggests that's a very real risk.

In the End

AI coding assistants like Cursor, GitHub Copilot, and Claude are powerful tools, but they're not magic. Used correctly, they can boost productivity. Used carelessly, they become time sinks.

From my dual lens as a doctor and a developer, I see AI in coding like I see automation in healthcare: promising, but only effective when applied thoughtfully within the right context.

So don’t rush to adopt every new tool. Be selective. Be critical. And most importantly, be aware of the hidden cost of time, the one thing none of us can get back.

Until AI gets better at understanding large, complex codebases, and until latency and reliability improve, I'll be using it like a scalpel: precisely, intentionally, and only when it makes sense.

Resources

- Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity

- Study finds AI tools made open source software developers 19 percent slower