11 Red Flags You're Chatting with an AI Bot: Protecting Yourself, Your Kids, and Your Digital Privacy


By Dr. Hamza Mousa, MD, Software Developer, and Head of AI Club

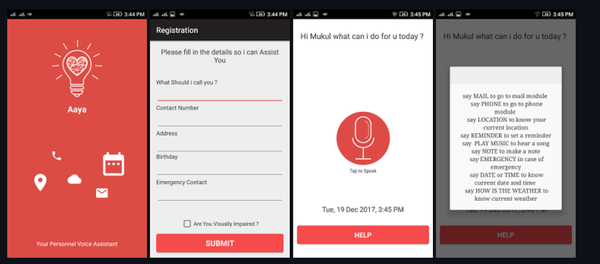

AI bots are used everywhere now: technical support, customer support, after-sales support, lead generation, and even driving people crazy. On the dark side, there are criminals who use AI bots to cause harm, from phishing to blackmail and more.

As a medical doctor, software developer, and AI researcher, I've witnessed firsthand how rapidly advancing artificial intelligence is being weaponized by digital criminals.

While AI chatbots offer tremendous benefits, they're increasingly being exploited for social engineering, cyberattacks, and privacy violations. Understanding how to identify AI bots masquerading as humans is crucial for protecting your digital information and that of your family.

The Growing Threat of AI-Powered Cybercrime

Digital criminals are leveraging sophisticated AI chatbots to conduct social engineering attacks, phishing campaigns, and even blackmail schemes. These bots can mimic human conversation patterns so convincingly that even experienced professionals struggle to detect them.

The implications for privacy protection are profound, especially when considering regulations like GDPR and HIPAA that govern how personal and medical information must be safeguarded.

Why AI Bot Detection Matters for Your Family

The stakes are particularly high when it comes to kids' privacy and parental control. Children are often less equipped to recognize sophisticated social engineering attempts, making them prime targets for predators using AI-powered communication tools.

As healthcare professionals operating under HIPAA compliance standards, we understand the critical importance of protecting sensitive information from unauthorized access.

Four Critical Scenarios Requiring Immediate Attention

Scenario 1: Medical Information Phishing

Why it's important: As a healthcare professional, I've seen how cybercriminals use AI bots to target patients seeking medical advice. These bots may pose as healthcare workers and request sensitive medical information outside the safeguards that HIPAA privacy standards require.

They might claim to offer medical consultations while actually harvesting data for identity theft or insurance fraud. Protect yourself by never sharing medical information with unverified sources and always confirming healthcare communications through official channels.

Scenario 2: Child Online Predation

Why it's important: AI-powered bots are being used by predators to groom children online. These sophisticated programs can maintain long-term conversations, building false relationships while extracting personal information.

Kids' privacy is paramount here: implement robust parental control measures and educate children about the dangers of online strangers, regardless of how convincing they may seem. GDPR provides strong protections for children's data, but prevention starts with awareness.

Scenario 3: Financial Social Engineering

Why it's important: AI bots are increasingly sophisticated at mimicking trusted contacts like family members, colleagues, or financial advisors. They may claim urgent financial needs or investment opportunities to trick victims into transferring money or revealing banking information.

Protecting your digital information requires verifying all financial requests through multiple channels and maintaining strict privacy protocols around personal financial details.

Scenario 4: Corporate Espionage and Business Email Compromise

Why it's important: In my work as a software developer, I've witnessed how AI bots target business professionals through sophisticated phishing campaigns. These bots research targets extensively and craft convincing messages to gain access to corporate networks, customer databases, and proprietary information.

Companies must implement comprehensive privacy protection policies and train employees to recognize AI-generated social engineering attempts that could compromise GDPR-compliant data handling procedures.

How Criminals Are Weaponizing AI Chatbots

Digital criminals are utilizing AI chatbots in increasingly sophisticated ways:

- Automated Social Engineering: Bots can engage thousands of targets simultaneously, learning from successful interactions to improve their manipulation techniques

- Deepfake Integration: Combining AI chatbots with voice and video deepfakes creates multi-layered deception campaigns

- Credential Harvesting: Bots collect login information through fake surveys, contests, or "verification" processes

- Blackmail Operations: AI can analyze social media to identify potential victims and craft personalized extortion attempts

- Disinformation Campaigns: Politically motivated bots spread false information while appearing as ordinary citizens

11 Red Flags You're Interacting with an AI Bot

1. Inconsistent Response Patterns

AI bots may provide conflicting information within the same conversation or change their stated preferences, background, or personal details without explanation.

AI bots often struggle to maintain consistent personal narratives because they don't have genuine life experiences to draw from. You might notice that:

- Professional Background Shifts: The person claims to be a software developer in one message but demonstrates no technical knowledge when asked specific questions about coding languages or industry practices. Conversely, they might suddenly display expertise in areas they hadn't previously mentioned.

- Geographic Inconsistencies: They initially state they live in New York but later mention local events or weather conditions that don't align with New York's current situation. This happens because AI bots generate responses based on training data rather than real-time personal experiences.

- Relationship Status Changes: Within a single conversation, they might refer to being single, then later mention their spouse or children without explanation for the apparent contradiction.
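If you want this check to rely less on memory, you can note each personal detail as it comes up and compare. Below is a minimal, hypothetical Python helper (`ClaimTracker` is a name made up for this example, not an existing tool). It assumes you record the claims yourself, because reliably extracting them from free text automatically is a much harder problem.

```python
from collections import defaultdict


class ClaimTracker:
    """Hypothetical helper: records self-reported facts and flags contradictions."""

    def __init__(self):
        # attribute -> list of (message_number, normalized_value) pairs
        self.claims = defaultdict(list)

    def record(self, message_number, attribute, value):
        # Normalize case and whitespace so "New York" and "new york " match.
        self.claims[attribute].append((message_number, value.strip().lower()))

    def contradictions(self):
        """Return every attribute that has been given more than one distinct value."""
        conflicts = {}
        for attribute, entries in self.claims.items():
            if len({value for _, value in entries}) > 1:
                conflicts[attribute] = entries
        return conflicts


tracker = ClaimTracker()
tracker.record(1, "job", "Software developer")
tracker.record(2, "city", "New York")
tracker.record(5, "relationship", "single")
tracker.record(9, "relationship", "married")
print(tracker.contradictions())  # {'relationship': [(5, 'single'), (9, 'married')]}
```

A flagged contradiction is a cue to ask a follow-up question, not proof of a bot: real people do change jobs and relationship status.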

2. Avoidance of Personal Questions

Real people usually share personal experiences readily, but AI bots often deflect or provide generic responses when asked about specific personal details.

AI bots consistently struggle with personal questions and typically employ several avoidance tactics. When pressed for specific personal details, they often give generic, templated responses like "I'm just focused on helping users" or "My main priority is assisting you with your needs" (in other words, they describe their function rather than their life). They may deflect by immediately redirecting the conversation back to you, asking counter-questions to avoid sharing anything about themselves.

Some bots will offer vague responses filled with buzzwords and generalities that sound human-like but contain no real personal substance.

Understanding this red flag is crucial for protecting your digital information and privacy. Cybercriminals using AI bots to conduct social engineering attacks rely on building trust through apparent personal connection.

When you notice this avoidance pattern, it's a critical warning sign that should trigger immediate caution about sharing sensitive information. For kids' privacy protection, teaching children to recognize this behavior is essential for effective parental control.

3. Unnatural Timing and Availability

Real people have natural conversation rhythms: they pause to think, take breaks, sleep, and respond based on their time zone. AI bots lack these human patterns, answering immediately around the clock without realistic delays or consideration for geographical location.
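To make "unnatural timing" concrete, here is a rough Python sketch that summarizes how quickly someone replies and how many different hours of the day they are active. The function names and the thresholds (a median reply under 5 seconds, activity spread over 18 or more distinct hours) are assumptions chosen for illustration, not validated cut-offs.

```python
from datetime import datetime
from statistics import median


def timing_profile(pairs):
    """pairs: list of (your_message_time, their_reply_time) datetimes."""
    delays = [(reply - sent).total_seconds() for sent, reply in pairs]
    reply_hours = {reply.hour for _, reply in pairs}
    return {
        "median_delay_s": median(delays),
        "distinct_reply_hours": len(reply_hours),
    }


def looks_automated(profile, max_median_delay_s=5, min_distinct_hours=18):
    # Illustrative thresholds only: near-instant replies spread over most of the day.
    return (profile["median_delay_s"] <= max_median_delay_s
            and profile["distinct_reply_hours"] >= min_distinct_hours)


# Simulated chat: one reply every hour of the day, each arriving 2 seconds later.
base = datetime(2024, 1, 1)
pairs = [(base.replace(hour=h), base.replace(hour=h, second=2)) for h in range(24)]
profile = timing_profile(pairs)
print(profile)                   # {'median_delay_s': 2.0, 'distinct_reply_hours': 24}
print(looks_automated(profile))  # True
```

A careful bot operator can add random delays and quiet hours, so a normal-looking profile proves nothing; an instant, round-the-clock profile is simply one more reason for caution.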

4. Generic Emotional Responses (No Human Touch, a.k.a. Feelings)

AI bots can mimic basic emotional expressions but struggle with authentic emotional depth. Their responses often feel robotic, overly positive, or formulaic, lacking the subtle emotional nuances that characterize genuine human interaction. Real humans express complex, sometimes contradictory emotions (frustration, disappointment, excitement, or ambivalence) that AI cannot authentically replicate.

Why This Matters: Recognizing generic emotional responses is crucial for protecting your privacy and digital information. Cybercriminals using AI bots often employ fake empathy to manipulate victims into sharing sensitive data. When emotional responses feel "off" or too perfect, it's a red flag that should trigger caution about divulging personal information.

5. Inability to Reference Previous Conversations

Human conversations naturally build upon previous exchanges, creating a continuous narrative where participants reference shared experiences, inside jokes, and established context. Real people remember details from earlier in your conversation: your concerns, your preferences, or personal information you've shared.

AI bots, however, often operate within limited memory windows or completely lose track of conversation history. They may ask questions they've already answered, forget important details about your situation, or fail to acknowledge previous discussions. This contextual amnesia is particularly evident when returning to a conversation after a break, as bots typically cannot resume where they left off with meaningful continuity.

However, don't get too excited. I built an AI bot that can recall everything and connect the dots, even better than my ex-wife in a heated argument about something I did 73 years ago in the kitchen while trying to make pasta (she remembers everything, while I don't even remember what I ate this morning). AI bots can be upgraded easily: with a good AI developer behind them, they can mimic your toxic ex.
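Persistent memory is not exotic. The Python sketch below (a hypothetical `ConversationMemory` class, not the bot described above) stores facts per user in a JSON file, so a brand-new session can recall what was said in an earlier one. A real chatbot would also feed these recalled facts into the language model's prompt; that part is left out here.

```python
import json
import os
import tempfile
from pathlib import Path


class ConversationMemory:
    """Hypothetical minimal memory: facts per user persist in a JSON file between sessions."""

    def __init__(self, path):
        self.path = Path(path)
        # Reload everything remembered in earlier sessions, if the file exists.
        self.facts = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, user, fact):
        self.facts.setdefault(user, []).append(fact)
        self.path.write_text(json.dumps(self.facts))  # save after every new fact

    def recall(self, user):
        return list(self.facts.get(user, []))


path = os.path.join(tempfile.mkdtemp(), "memory.json")
monday = ConversationMemory(path)
monday.remember("alice", "burned the pasta in the kitchen")
friday = ConversationMemory(path)  # a brand-new session reloads the same file
print(friday.recall("alice"))      # ['burned the pasta in the kitchen']
```

This is why "it remembered everything I said last week" should not, on its own, convince you that you are talking to a human.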

6. Overly Helpful or Agreeable Behavior

AI bots, including advanced systems like ChatGPT, are typically trained to be highly accommodating and positive. They rarely disagree, challenge ideas, or express contrarian opinions that characterize genuine human interaction. This artificial agreeableness stems from their design to avoid conflict and maximize user satisfaction, making them seem "too perfect" in conversation.

Why This Matters: This behavior pattern is crucial for privacy protection because overly agreeable bots can manipulate users into trusting them completely.

Cybercriminals exploit this trait to gain sensitive information without resistance. Recognizing this red flag helps protect your digital information and is essential for parental control strategies that keep kids' privacy secure. Under HIPAA and GDPR compliance requirements, verifying authentic human interaction becomes critical when bots exhibit this suspiciously perfect behavior.

7. Repetitive Language Patterns

AI-generated responses often exhibit repetitive phrases, unusual sentence structures, or vocabulary that doesn't align with the speaker's claimed background or expertise level. People with ADHD frequently possess enhanced pattern recognition abilities, allowing them to quickly identify these linguistic inconsistencies that others might miss.

If you have ADHD, this heightened sensitivity to patterns and irregularities can actually become a superpower for spotting AI bots in conversation. Your brain's natural tendency to notice subtle repetitions, awkward phrasing, or vocabulary mismatches means you can often detect artificial intelligence in less than a minute.
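Repetition can also be counted mechanically, which is a useful cross-check for anyone. The Python sketch below counts word trigrams (runs of three words) that recur across a set of messages; the choice of trigrams and the `min_count=2` threshold are illustrative assumptions.

```python
import re
from collections import Counter


def repeated_phrases(messages, n=3, min_count=2):
    """Return word n-grams that occur at least min_count times across all messages."""
    counts = Counter()
    for message in messages:
        words = re.findall(r"[a-z']+", message.lower())
        for i in range(len(words) - n + 1):
            counts[" ".join(words[i:i + n])] += 1
    return {phrase: c for phrase, c in counts.items() if c >= min_count}


messages = [
    "I completely understand how you feel.",
    "That is a great question! I completely understand how you feel.",
    "Great question. I completely understand your concern.",
]
for phrase, count in repeated_phrases(messages).items():
    print(count, phrase)
```

Here "i completely understand" is counted three times. Trigrams can span sentence boundaries ("great question i"), and humans have pet phrases too, so treat a high count as a signal to look closer rather than a verdict.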

Why This Matters: This unique detection ability is valuable for privacy protection and protecting your digital information. For parental control purposes, individuals with ADHD can serve as early warning systems for identifying potentially malicious AI interactions that threaten kids' privacy.

Under HIPAA compliance requirements, healthcare professionals with ADHD may have an advantage in recognizing AI-generated communications that could compromise patient privacy. Similarly, GDPR compliance benefits from this enhanced ability to detect artificial interactions that might violate data protection protocols.

8. Requests for Sensitive Information

Malicious AI bots frequently rush to extract personal data, passwords, or financial details using clever pretexts. They might pose as bank representatives, government officials, or trusted contacts to trick you into revealing privacy-sensitive information.

Stay Vigilant: Recognizing these tactics is crucial for protecting your digital information. Under HIPAA and GDPR regulations, unauthorized access to personal data can have serious legal and financial consequences. For parental control effectiveness and kids' privacy protection, immediately questioning any unsolicited requests for sensitive information is essential. Protect yourself by verifying identities through official channels before sharing any confidential data.
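A simple screen for these requests can be sketched with regular expressions. The categories and patterns below are hypothetical examples in Python and will miss many real-world phrasings; they only illustrate the idea of flagging messages that ask for passwords, one-time codes, card details, bank details, or identity documents.

```python
import re

# Illustrative patterns only; real phishing wording varies far more widely.
SENSITIVE_PATTERNS = {
    "password": re.compile(r"\b(password|passcode|pin)\b", re.IGNORECASE),
    "one_time_code": re.compile(
        r"\b(verification|security|one[- ]time)\s+code\b|\botp\b", re.IGNORECASE),
    "card": re.compile(r"\b(card number|cvv|cvc|expiry date)\b", re.IGNORECASE),
    "bank": re.compile(r"\b(account number|routing number|iban)\b", re.IGNORECASE),
    "identity": re.compile(
        r"\b(social security|ssn|passport number|date of birth)\b", re.IGNORECASE),
}


def sensitive_requests(message):
    """Return the (sorted) categories of sensitive data a message appears to ask for."""
    return sorted(name for name, pattern in SENSITIVE_PATTERNS.items()
                  if pattern.search(message))


msg = ("To verify your account, please reply with the 6-digit "
       "verification code and your card number.")
print(sensitive_requests(msg))  # ['card', 'one_time_code']
```

Many banks explicitly warn customers never to share one-time codes, so a message asking for one deserves extra suspicion no matter who appears to be sending it.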

9. Inconsistent Knowledge Base

Bots may demonstrate extensive knowledge in some areas while showing surprising gaps in basic common knowledge or current events.

10. Pressure Tactics and Urgency

Cybercriminals using AI bots often create artificial urgency to pressure victims into making hasty decisions without proper verification.
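Urgency is usually expressed through a small set of stock phrases, which makes it easy to spot with a quick scan. The Python sketch below assumes an illustrative, English-only phrase list (`URGENCY_PHRASES` is made up for this example) and returns every match in the order it appears.

```python
import re

# Hypothetical, English-only phrase list; extend it for your own context.
URGENCY_PHRASES = [
    r"right now",
    r"immediately",
    r"urgent(?:ly)?",
    r"within \d+ (?:minutes|hours)",
    r"act fast",
    r"last chance",
    r"account (?:will be )?(?:suspended|locked|closed)",
    r"don'?t tell anyone",
    r"before it'?s too late",
]
URGENCY_RE = re.compile(r"\b(?:" + "|".join(URGENCY_PHRASES) + r")\b", re.IGNORECASE)


def urgency_hits(message):
    """Return the urgency phrases found in a message, in order of appearance."""
    return [match.group(0) for match in URGENCY_RE.finditer(message)]


msg = ("Your account will be suspended within 30 minutes. "
       "Send the payment immediately and don't tell anyone.")
print(urgency_hits(msg))
# ['account will be suspended', 'within 30 minutes', 'immediately', "don't tell anyone"]
```

Urgency is most suspicious when it arrives together with a request for money or sensitive data; when you see both, stop and verify through a channel you already trust.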

And the real trick to know is:

11. Behavioral and Preference Inconsistencies

Human personalities and preferences tend to remain relatively stable within short timeframes, while AI bots may generate responses that lack this consistency:

- Sudden Opinion Changes: They express strong support for a political candidate or social cause, then later make statements that contradict those positions without explanation or evolution of thought.

- Inconsistent Communication Style: Their writing style might shift dramatically, from formal and professional to casual and slang-heavy, or from detailed and thorough to brief and superficial, often within the same conversation.

- Interest Level Fluctuations: They might show intense curiosity about your hobbies or experiences, then suddenly lose interest or forget details you've shared, requiring you to re-explain information.
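Style shifts can be roughly quantified by comparing a few simple features between two messages. The Python sketch below uses average words per sentence, average word length, and exclamation-mark count; these features and the 40% change threshold are assumptions for illustration, and genuine stylometry uses far richer models.

```python
import re
from statistics import mean


def style_features(message):
    """Crude stylometric features for a single message."""
    sentences = [s for s in re.split(r"[.!?]+", message) if s.strip()]
    words = re.findall(r"[A-Za-z']+", message)
    return {
        "avg_sentence_words": len(words) / max(len(sentences), 1),
        "avg_word_length": mean(len(w) for w in words) if words else 0.0,
        "exclamations": message.count("!"),
    }


def relative_change(x, y):
    """Change between two values as a fraction of the larger one (0.0 to 1.0)."""
    larger = max(abs(x), abs(y))
    return abs(y - x) / larger if larger else 0.0


def style_shift(message_a, message_b, threshold=0.4):
    """Return features that changed by more than `threshold` between two messages."""
    a, b = style_features(message_a), style_features(message_b)
    return {
        key: (round(a[key], 2), round(b[key], 2))
        for key in a
        if relative_change(a[key], b[key]) > threshold
    }


formal = ("I have reviewed the quarterly financial statements thoroughly. "
          "The documentation appears consistent and complete.")
casual = "lol ok cool!! gonna check it tmrw"
print(style_shift(formal, casual))
```

People naturally write differently on a phone than at a desk, so look for repeated, unexplained swings across a conversation rather than a single difference.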

Protection Strategies for Individuals and Families

For Adults:

- Verify identities through multiple communication channels

- Never share sensitive information without confirming legitimacy

- Use two-factor authentication for all important accounts

- Keep software updated to protect against AI-powered malware

- Protect your digital information by limiting what you share online

For Parents:

- Implement comprehensive parental control software

- Educate children about online safety and AI deception

- Monitor children's online interactions without violating their privacy

- Establish family guidelines for sharing personal information

- Ensure kids' privacy by understanding how the platforms they use comply with GDPR

For Healthcare Professionals:

- Follow strict HIPAA compliance protocols for all communications

- Verify patient identities before discussing medical information

- Report suspicious communications to IT security teams

- Stay informed about AI threats to privacy protection in healthcare

- Protect yourself and your patients by maintaining secure communication channels

The Regulatory Landscape

Regulations like GDPR and HIPAA provide important frameworks for privacy protection, but they cannot keep pace with rapidly evolving AI threats. Organizations must implement proactive strategies for protecting digital information that go beyond basic compliance requirements.

Conclusion

As AI technology continues advancing, the line between human and artificial interaction becomes increasingly blurred. Protecting yourself, your family, and your sensitive information requires constant vigilance and education. By recognizing these red flags and implementing comprehensive privacy protection measures, you can navigate the digital landscape more safely.

Remember that kids' privacy and parental control remain paramount in our connected world. Whether you're a healthcare professional operating under HIPAA requirements or a parent concerned about GDPR compliance for your children's data, staying informed about AI threats is essential for effective privacy protection.

The responsibility for protecting your digital information lies with all of us – individuals, families, businesses, and regulatory bodies must work together to stay ahead of digital criminals who increasingly utilize AI chatbots as their weapon of choice.

Dr. Hamza Mousa is a physician, software developer, and Head of AI Club with expertise in both healthcare privacy regulations and artificial intelligence technology. His work focuses on the intersection of medical ethics, data protection, and emerging technology threats.