The New Wave of AI Tools and Self-Diagnosis: Why You Should Never Post Your Symptoms to AI Expecting an Accurate Diagnosis

As someone who wears multiple hats—doctor, developer, and head of an AI club—I’ve seen firsthand how the rise of artificial intelligence is reshaping healthcare. It’s exciting, no doubt. AI has incredible potential to assist doctors, streamline workflows, and even help patients better understand their health.

But there’s a dark side that keeps me up at night: people posting their symptoms online to AI tools and expecting accurate diagnoses.

Let me tell you why this trend scares me—and why it should scare you too.

A Real-Case Scenario That Stuck With Me

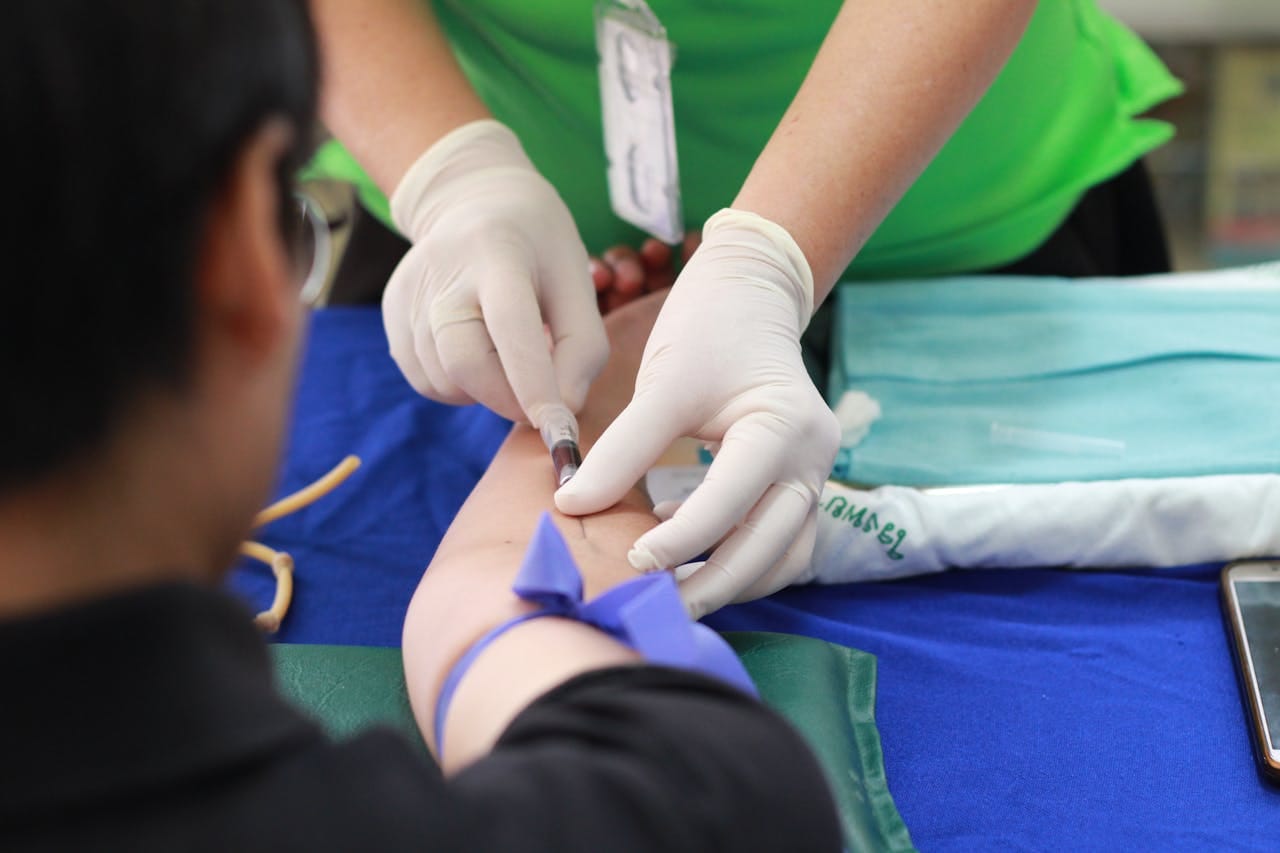

Last year, I was working late in my clinic when a young woman walked in looking pale and anxious. She told me she’d been using an app (one of those symptom-checkers powered by AI) because she didn’t want to bother her doctor over something “minor.” The app had asked her about fatigue, headaches, and occasional dizziness. After answering a few questions, it suggested she might have chronic fatigue syndrome or maybe just stress. No big deal, right?

Wrong.

When I examined her, I noticed her blood pressure was alarmingly low. A quick lab test revealed severe anemia caused by undiagnosed internal bleeding. If she hadn’t come in when she did, things could have gotten much worse. In my experience, hers isn’t an isolated case; this happens more often than you’d think.

The problem? AI systems are only as good as the data they’re trained on, and they lack the nuanced judgment that comes from years of medical training and experience. They can’t see your face, hear your tone, or feel your pulse. And let’s be honest—they’re not designed to replace doctors; they’re meant to support them.

Why AI Isn’t Ready for Prime-Time Diagnosis

Here’s the thing: building an AI model for diagnosing illnesses is insanely complex. As a developer, I know all too well how tricky it is to create algorithms that account for every possible variable. Let me break it down:

- Garbage In, Garbage Out: Most AI models rely on massive datasets. But if those datasets aren’t diverse enough, or if they contain errors, the results will be flawed. For example, many existing models are trained mostly on data from certain demographic groups, so they may miss conditions that present differently in people outside those groups.

- Lack of Context: When a patient describes their symptoms to me, I don’t just listen to what they say—I pay attention to how they say it. Are they nervous? Overly concerned? Trying to downplay pain? These subtle cues matter, but AI doesn’t pick up on them.

- Overconfidence in Technology: People sometimes trust technology too much. During one of our AI club meetings, we tested several popular symptom-checker apps. One member jokingly entered chest pain and shortness of breath, two classic warning signs of a heart attack. Guess what? The app confidently diagnosed him with acid reflux. Spoiler alert: he didn’t actually have heart issues, but imagine if he had!

- False Sense of Security: Even when AI gets it right, users might misinterpret the information, and when it gets it wrong, a reassuring answer can stop people from seeking care. A friend once showed me a chatbot’s verdict that she likely had seasonal allergies. She stopped worrying… until, weeks later, she ended up hospitalized with pneumonia. What she had written off as “allergies” was actually the start of a serious infection.
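For the developers reading this, here is a minimal Python sketch of one way the “garbage in, garbage out” problem can hide: a model can look good on overall accuracy while missing many real cases in an underrepresented group. The check below computes sensitivity (the share of true cases the model catches) separately per group. The records are invented toy data, and `group_a` / `group_b` are hypothetical placeholders for demographic groups; this illustrates the idea and is not an evaluation of any real app.

```python
from collections import defaultdict

# Invented toy records for illustration only: (group, has_condition, model_flagged_it).
# "group_a" and "group_b" are hypothetical stand-ins for demographic groups;
# group_b is deliberately smaller, i.e. underrepresented.
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, True),
    ("group_b", False, False), ("group_b", False, False),
]


def accuracy(rows):
    """Fraction of rows where the model's flag matches the true label."""
    return sum(truth == pred for _, truth, pred in rows) / len(rows)


def sensitivity_by_group(rows):
    """Share of true cases the model caught, computed separately per group."""
    caught = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in rows:
        if truth:  # only real cases count toward sensitivity
            total[group] += 1
            caught[group] += pred  # True counts as 1, False as 0
    return {g: caught[g] / total[g] for g in total}


print(f"overall accuracy: {accuracy(records):.2f}")
for group, sens in sensitivity_by_group(records).items():
    print(f"{group} sensitivity: {sens:.2f}")
```

With these toy numbers, overall accuracy comes out at 0.90, which looks reassuring, yet the model catches only half of the true cases in the smaller group (sensitivity 0.50 versus 1.00). A single headline number can hide exactly that kind of gap.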

But What About the Good Stuff?

Don’t get me wrong—I’m not anti-AI. Far from it. There are plenty of ways AI can enhance healthcare without replacing human expertise:

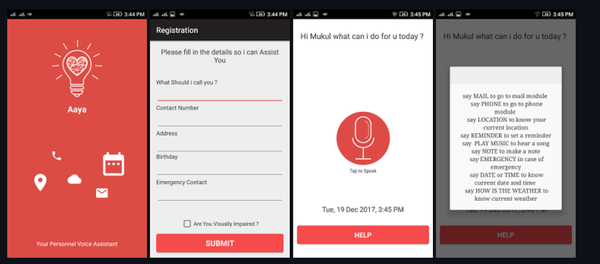

- Triage Support: AI can help prioritize cases based on urgency. For instance, if someone reports sudden vision loss or difficulty speaking, an AI system could flag it as potentially life-threatening and recommend immediate care.

- Patient Education: Chatbots can provide reliable information about common conditions, medications, or lifestyle changes. Think of them as digital assistants, not decision-makers.

- Research and Analysis: As a researcher, I love how AI can analyze mountains of data to uncover patterns we might miss. For example, machine learning helped identify early predictors of diabetic complications in a recent study I worked on.
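The triage idea can be sketched as a simple rule check. The Python below is a toy illustration, not a clinical tool: the red-flag phrases are assumptions chosen to mirror the examples in this post, and exact phrase matching is a big simplification of how people actually describe their symptoms. Notice that it only ever escalates; when no rule fires, it still sends the person to a human professional rather than telling them they are fine.

```python
# Hypothetical red-flag phrases, chosen to mirror the examples in this post.
# This is NOT a validated clinical rule set.
RED_FLAGS = {
    "sudden vision loss",
    "difficulty speaking",
    "chest pain",
}

URGENT = "red flag: seek immediate care"
ROUTINE = "no red flag matched: follow up with a healthcare professional"


def triage(symptoms: list[str]) -> str:
    """Escalate if any reported symptom exactly matches a red-flag phrase.

    Exact, case-insensitive phrase matching is a simplifying assumption;
    real symptom descriptions are free text and far messier.
    """
    reported = {s.strip().lower() for s in symptoms}
    if reported & RED_FLAGS:
        return URGENT
    # No rule fired. That does not mean the person is fine,
    # so the fallback still routes them to a human clinician.
    return ROUTINE


print(triage(["Sudden vision loss"]))                 # red flag: seek immediate care
print(triage(["chest pain", "shortness of breath"]))  # red flag: seek immediate care
print(triage(["headache", "fatigue"]))                # no red flag matched: follow up with a healthcare professional
```

The asymmetry is deliberate: a rule that fires too often costs an unnecessary visit, while one that stays silent when it shouldn’t can cost far more.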

The key is knowing where AI excels—and where it falls short.

My Personal Takeaway

If you take anything away from this post, let it be this: AI is a tool, not a doctor.

As both a physician and a techie, I believe wholeheartedly in the power of innovation. But I also know that medicine is deeply personal. Every patient is unique, and no algorithm can fully capture the complexity of human biology—or the art of compassionate care.

So next time you’re tempted to type your symptoms into an AI chatbot, pause for a second. Ask yourself: Would I trust this machine with my life? Because that’s exactly what you’re doing when you rely on it for medical advice.

Instead, use these tools responsibly. Treat them like a starting point—not the final word. And always, always follow up with a real, live healthcare professional.

Final Thoughts

We’re living through a fascinating era where technology meets medicine. But let’s not lose sight of what truly matters: people. Machines can crunch numbers and spit out probabilities, but they can’t hold your hand during a tough diagnosis or celebrate your recovery.

So here’s my plea: Use AI wisely, stay curious, and never underestimate the value of human connection in healthcare. Your health—and your life—depend on it.