“AI Said It Was Fine, Then a Human Died” (Why You Should Never Self-Diagnose)

Hey. I’m Dr. Hmza, a medical doctor, software engineer, and someone who’s been building and thinking about AI for years. I’ve written extensively about the ethical use of artificial intelligence in healthcare. And one thing keeps coming up again and again:

AI is not your doctor.

Not even close.

And yet, people are typing symptoms into chatbots, getting diagnoses back, and making life-altering decisions based on those answers.

Let me tell you why that’s dangerous, and why it might be even more dangerous than Googling your symptoms.

The Illusion of Expertise

One of the scariest things about AI is how confident it sounds. It doesn’t stutter. It doesn’t say “I’m not sure.” It gives you an answer, fast, clear, and convincing.

That’s great when you're asking how to change a tire or what time zone Tokyo is in.

But when you type “I have chest pain” or “my stomach hurts,” and the AI says “you probably have gastritis,” that’s not a diagnosis. That’s a guess based on patterns in data, not your body, not your history, not your context.

You wouldn’t trust a mechanic who never saw your car to fix its engine, right?

Then why trust an AI with no access to your real-time vitals, family history, or physical exam?

AI Is Fast, But Medicine Isn’t

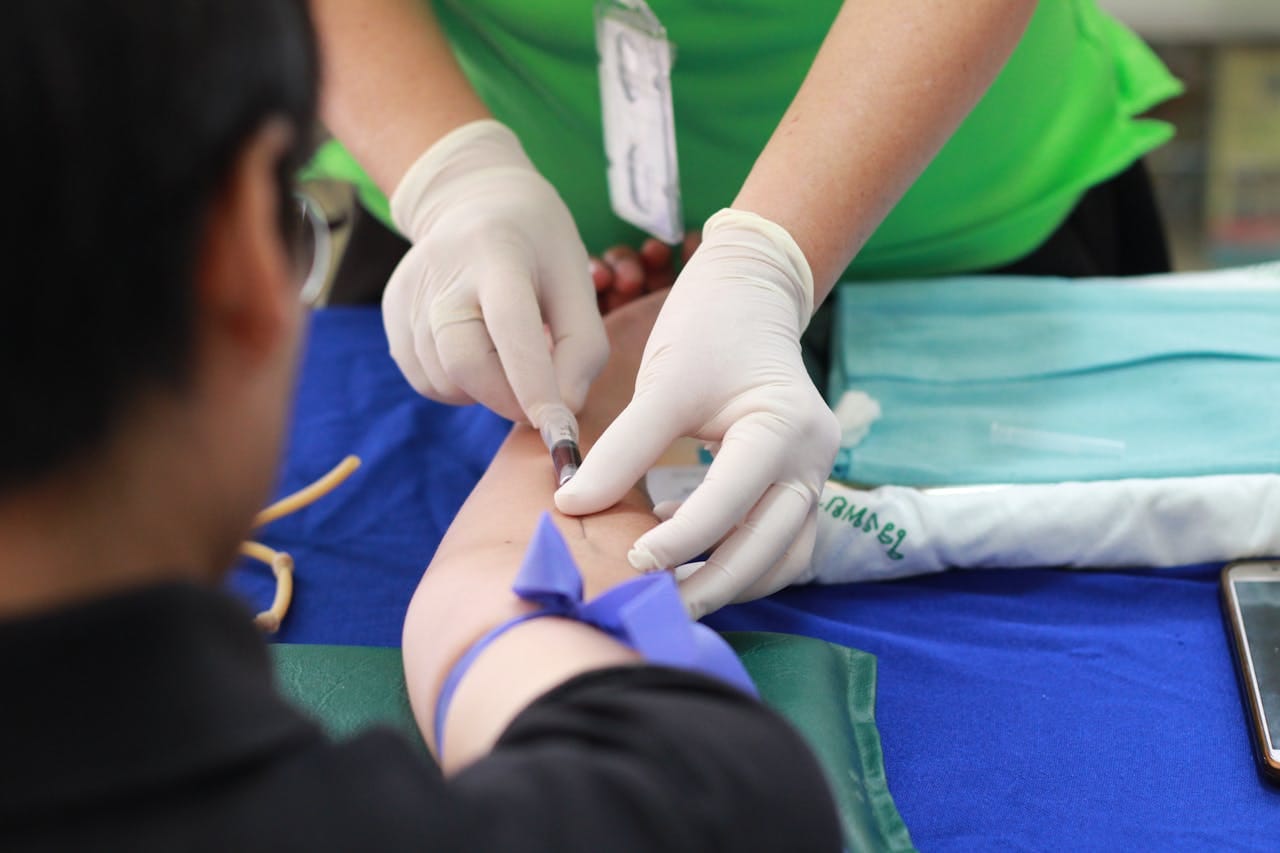

Here’s something most people don’t realize: medicine isn’t just about connecting symptoms to diseases. It’s about ruling things out, running tests, and using clinical judgment to make decisions.

This process is called differential diagnosis, and it’s the backbone of safe medical care.

AI doesn’t do that. It reacts. It gives you the first thing that matches your input — like a very smart autocomplete.

That’s why I’ve seen cases where ChatGPT told someone they had cancer because they mentioned fatigue and weight loss — two common symptoms that could also mean thyroid issues, depression, or even sleep deprivation.

It’s not that the AI is wrong. It’s that it doesn’t know when to stop.

Real Stories, Real Dangers

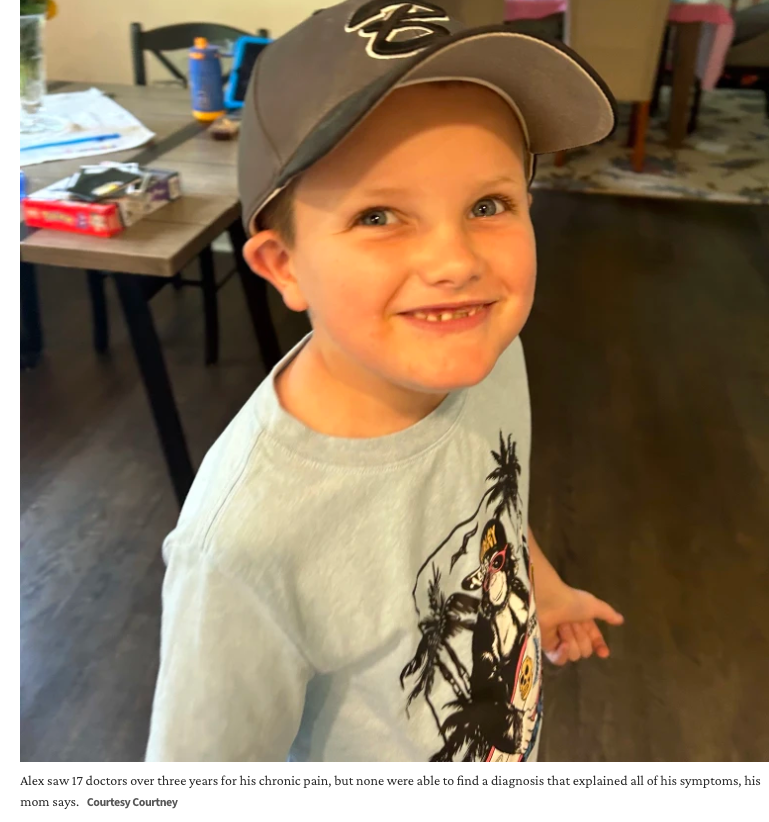

A few weeks ago, a young man in Iraq experienced abdominal pain. Instead of seeing a doctor, he asked an AI assistant. Within minutes, he got a medication recommendation.

Hours later, he was dead.

This isn’t an isolated incident.

I’ve had friends call me in a panic after their kids used AI to interpret blood test results. One almost started chemotherapy for a rare disease the AI suggested, until we tested for hepatitis and found the real cause.

AI doesn’t panic. It doesn’t hesitate. It gives you an answer — and it gives it with confidence.

Which makes it dangerously persuasive.

Why This Is Worse Than Google

Google used to be the go-to place for symptom searching. We all did it. Type in “headache and nausea,” and suddenly you’re convinced you have a brain tumor.

But AI is different.

Google gave you a list of possibilities. Some were scary, some weren’t. You could click around, compare sources, maybe even laugh at how ridiculous some articles sounded.

AI gives you one answer, wrapped up in a neat sentence. It feels personal. It feels authoritative.

And that’s exactly why it’s more dangerous.

AI Isn’t Just a Medical Problem

The same pattern plays out everywhere.

As a horse riding instructor, I see people online swearing that horses shouldn’t eat alfalfa or that barefoot is always better, often citing unverified AI-generated advice. No critical thinking. No fact-checking. Just blind trust.

Same story. Different field.

So What Can You Do?

Here’s my advice, as both a doctor and an AI developer:

1. Use AI as a Starting Point, Not a Final Answer

Think of AI like a library. It can give you information, but it can’t interpret it for your specific situation. Always consult a licensed professional before making health decisions.

2. Be Specific When Asking Questions

Don’t type “I feel tired.” Try “I’ve been feeling fatigued for three weeks, have trouble sleeping, and have lost 5 pounds.” Better input leads to better output, but only if you know how to interpret the result.

3. Fact-Check Everything

If AI tells you something alarming, check it against reputable sources. Ask your doctor. Don’t take the first answer as gospel.

4. Know Your Limits

Just because you read something doesn’t mean you understand it. Medicine is complex. Biology is messy. AI simplifies too much.

5. Be Skeptical, Especially With Your Health

Your body is unique. Your life matters. Don’t let a machine decide what’s wrong with you without human oversight.

Final Thoughts: AI Can Give You Answers — But Wisdom Comes From Knowing When to Stop

I work with AI every day. I write about it. I teach it. I believe in its potential. But I also know its limits. AI is just a tool, like a scalpel. In the right hands, it saves lives. In the wrong ones, it cuts deep.

So next time you’re tempted to ask a chatbot about your symptoms, pause.

Ask yourself:

- Am I describing this clearly?

- Do I really understand what the AI is telling me?

- Is this advice meant for me, or just a general case?

- Should I talk to a real person?

Because sometimes, the best answer isn’t the fastest one.

Sometimes, it’s the one that comes from experience, empathy, and expertise — things AI still can’t replicate.

Stay smart. Stay safe. And remember:

AI gives knowledge, not wisdom! That’s still up to you.